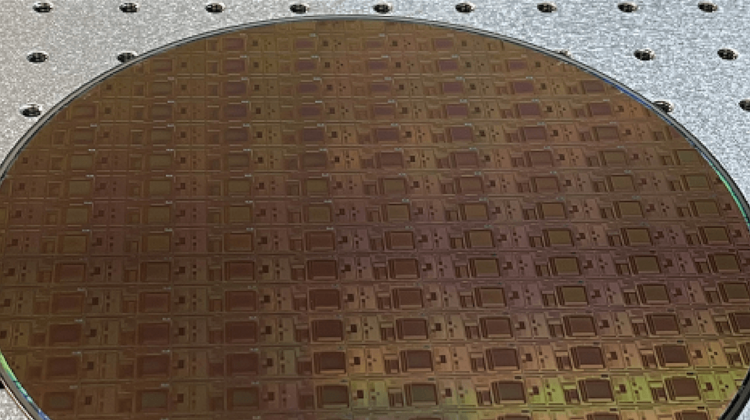

A team led by researchers at the University of Southern California’s Viterbi School of Engineering has developed a new type of computer chip that it believes has the best memory of any chip yet created for edge AI (AI running on portable devices).

The power and sophistication of software are limited by the hardware on which it runs. According to USC professor of electrical and computer engineering Joshua Yang, hardware has become a bottleneck for further development. Over the past decade, the size of the neural networks needed for AI and data science applications has doubled every 3.5 months, while the hardware capability needed to process them has doubled only every 3.5 years.
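To make the size of that gap concrete, the short Python sketch below compounds both doubling rates over a ten-year window. The two doubling periods are the figures quoted above; the ten-year window and the helper name `growth_factor` are illustrative assumptions.

```python
# Compound two doubling periods over the same time window.
# Doubling periods are from the article; the 10-year window is an assumption.

def growth_factor(years: float, doubling_period_months: float) -> float:
    """Return how many times a quantity grows if it doubles every `doubling_period_months`."""
    months = years * 12
    return 2 ** (months / doubling_period_months)


DECADE_YEARS = 10
model_growth = growth_factor(DECADE_YEARS, 3.5)          # network size: doubles every 3.5 months
hardware_growth = growth_factor(DECADE_YEARS, 3.5 * 12)  # hardware: doubles every 3.5 years (42 months)

print(f"Network size grows ~{model_growth:.2e}x")
print(f"Hardware capability grows ~{hardware_growth:.1f}x")
print(f"Gap between the two: ~{model_growth / hardware_growth:.2e}x")
```

Over ten years, a 3.5-month doubling period compounds to a factor of roughly 2 × 10^10, whereas a 3.5-year doubling period yields only about a sevenfold increase.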

Efforts to address this hardware challenge have typically focused either on silicon chips or on new types of materials and devices. Yang’s work falls in the middle, exploiting and combining the advantages of new materials and traditional silicon technology.

The team’s recent breakthrough, which stems from a new understanding of fundamental physics, has drastically increased the memory capacity available for AI hardware. The researchers developed a protocol for reducing ‘noise’ in the devices and then demonstrated that the protocol is practical in integrated chips. According to Yang, the new memory chip has the highest information density per device (11 bits) of any known memory technology. Such small but powerful devices could play a critical role in bringing substantial computing power to mobile devices. The devices can serve not only as memory but also as processors: millions of them on a small chip, working in parallel to run AI tasks rapidly, would require only a small battery.
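Storing 11 bits in one device means it must hold 2^11 = 2,048 distinguishable states, which is why reducing noise matters: a read error larger than half the spacing between adjacent levels decodes to the wrong value. The Python sketch below illustrates that trade-off under loudly stated assumptions: the conductance range (`G_MIN_US`, `G_MAX_US`) and the evenly spaced levels are hypothetical, not values or a programming scheme taken from the paper.

```python
# Hypothetical mapping of 11-bit values onto evenly spaced conductance levels.
# G_MIN_US and G_MAX_US are assumed values, NOT figures from the study.
G_MIN_US = 10.0   # lowest conductance, in microsiemens (assumed)
G_MAX_US = 100.0  # highest conductance, in microsiemens (assumed)
BITS = 11
LEVELS = 2 ** BITS  # 2048 distinguishable states per device

# Spacing between adjacent levels; noise must stay below half of this.
STEP_US = (G_MAX_US - G_MIN_US) / (LEVELS - 1)


def encode(value: int) -> float:
    """Target conductance (microsiemens) for an integer in [0, LEVELS - 1]."""
    if not 0 <= value < LEVELS:
        raise ValueError(f"value must be in [0, {LEVELS - 1}]")
    return G_MIN_US + value * STEP_US


def decode(conductance_us: float) -> int:
    """Snap a measured conductance to the nearest level and return its integer value."""
    level = round((conductance_us - G_MIN_US) / STEP_US)
    return min(max(level, 0), LEVELS - 1)


print(LEVELS, f"levels, {STEP_US:.4f} uS apart")
# A read error under half a step still decodes correctly; a larger one does not.
print(decode(encode(1234) + 0.4 * STEP_US))  # 1234
print(decode(encode(1234) + 0.6 * STEP_US))  # 1235
```

With this assumed 90 µS window, adjacent levels are only about 0.044 µS apart, so each extra bit halves the noise a device can tolerate.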

The chips combine silicon with metal oxide memristors to create powerful yet energy-efficient chips. Rather than representing information by the number of electrons, as current chips do, the technique uses the positions of atoms. These atomic positions offer a compact and stable way to store more information in analogue rather than digital form. Moreover, the information can be processed where it is stored instead of being sent to one of a few dedicated ‘processors’, eliminating the so-called ‘von Neumann bottleneck’ that exists in current computing systems. In this way, according to Yang, computing for AI is ‘more energy efficient with a higher throughput’.
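Processing information where it is stored is commonly illustrated with a memristor crossbar: stored values are device conductances, and a matrix-vector product follows from Ohm’s law (each device passes a current I = V·G) and Kirchhoff’s current law (the currents on each column wire add up). The Python sketch below computes the ideal column currents I_j = Σ_i V_i·G_ij. It is a generic, idealised model that ignores wire resistance, device nonlinearity and noise, and it is not presented as the specific architecture used in the study; the function name `crossbar_mvm` is hypothetical.

```python
from typing import List


def crossbar_mvm(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Ideal crossbar output: column current I_j = sum over rows i of V_i * G[i][j].

    Each device follows Ohm's law (I = V * G) and the column wire sums the
    device currents (Kirchhoff's current law). Wire resistance, nonlinearity
    and noise are ignored, which is an idealising assumption.
    """
    rows = len(conductances)
    if len(voltages) != rows:
        raise ValueError("need one input voltage per row")
    cols = len(conductances[0])
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Current through the device at row i, column j adds to column j.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


# Input voltages in volts; stored weights as conductances in microsiemens,
# so the resulting column currents are in microamps.
weights_us = [[1.0, 2.0],
              [3.0, 4.0]]
inputs_v = [0.5, 0.25]
print(crossbar_mvm(inputs_v, weights_us))  # [1.25, 2.0]
```

Because every column sums its currents at the same time, the whole product is, in principle, read out in one step rather than by moving each stored weight to a separate processor, which is the sense in which such designs avoid the von Neumann bottleneck.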

Yang explained that the electrons manipulated in traditional chips are ‘light’, which makes them prone to moving around and more volatile. Instead of storing information with electrons, Yang and his collaborators store it using whole atoms.

Normally, when a computer is turned off, the information in its memory is lost, and bringing it back to run a new computation costs both time and energy. The new method, which relies on activating atoms rather than electrons, does not require battery power to maintain stored information. In AI computations, a stable memory with high information density is crucial. Furthermore, relying on atoms rather than electrons allows the chips to become smaller, packing more computing capacity into a smaller area.

The research has been published in Nature.