Researchers at Germany’s Fraunhofer-Gesellschaft, Europe’s largest application-oriented research organisation, are developing a way to integrate radar and LiDAR (light detection and ranging) sensors into the headlights of self-driving vehicles, creating a more space-efficient design.

Today’s vehicles are increasingly taking over driving functions: automatically maintaining the correct distance from the car in front, correcting the vehicle’s path if it begins to drift out of its lane and braking hard if the driver is caught off guard. All this is possible thanks to cameras in the passenger compartment and radar sensors in the radiator grille. Full autonomy will likely require even more sensors, but car designers aren’t keen on cramming them into the grille.

Five Fraunhofer institutes, including the Institute for High Frequency Physics and Radar Techniques FHR, have joined forces as part of the Smart Headlight project to create a method of installing sensors that’s both space-saving and as subtle as possible – without compromising on function or performance. The project’s aim is to develop a sensor-integrated headlight for driver-assistance systems that makes it possible to combine a range of sensor elements with adaptive light systems. It’s hoped that this will improve sensors’ ability to identify objects on the road – and especially other road users, such as pedestrians. For example, LiDAR sensors can be used in electronic brake-assist or distance-control systems.

‘We’re integrating radar and LiDAR sensors into headlights that are already there anyway – and what’s more, they’re the parts that ensure the best possible transmission for optical sensors and light sources, and are able to keep things clean,’ said Tim Freialdenhoven, a researcher at Fraunhofer FHR.

LiDAR sensors work by measuring the time between emitting a laser pulse and receiving its reflection. Because light travels at a known, constant speed, this time of flight yields exceptionally precise distance measurements.
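The time-of-flight principle reduces to one line of arithmetic: the pulse travels to the target and back, so the distance is the speed of light multiplied by half the round-trip time. The sketch below is a minimal illustration, assuming propagation at the vacuum speed of light (air slows light only very slightly); the function name `tof_distance` is hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact by definition).
# Assumption: the small slowdown of light in air is ignored.
SPEED_OF_LIGHT = 299_792_458.0


def tof_distance(round_trip_s: float) -> float:
    """Distance to a target, in metres, from a LiDAR round-trip time in seconds."""
    # The pulse covers the distance twice (out and back), so halve the path length.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# A reflection received 200 nanoseconds after the pulse was emitted:
print(round(tof_distance(200e-9), 2))  # 29.98
```

The same formula shows why the timing electronics matter: an error of one nanosecond in the measured round trip shifts the distance estimate by roughly 15 cm.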

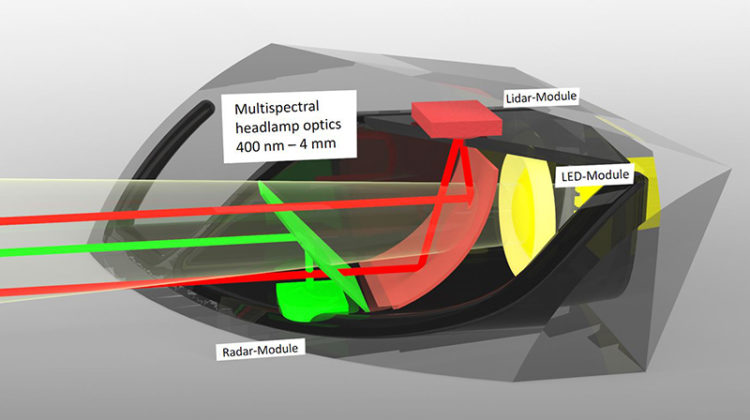

The first stage in developing the headlight sensors is designing a LiDAR system suitable for integration into automotive technology. The design must also ensure that the two additional sensors do not obstruct the light the headlight projects onto the road, which is a challenge because the LEDs that produce that light sit far back in the headlight. For this reason, the researchers are positioning the LiDAR sensors at the top of the headlight casing and the radar sensors at the bottom.

At the same time, the beams from both sensor systems need to follow the same path as the LED light, which is made more difficult by the fact that all three types of beam have different wavelengths. The visible light from the headlight lies in the region of 400–750 nanometres, while infrared LiDAR beams range from 860 to 1,550 nanometres. Radar beams, by contrast, have a wavelength of four millimetres.
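To put these wavelengths side by side, the short sketch below converts each quoted band edge into a frequency using f = c / λ and compares the radar wavelength with the shortest visible one. It is only an illustration of the arithmetic; the band labels and the `frequency_hz` helper are hypothetical names, and the wavelengths are the ones quoted in the paragraph above.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def frequency_hz(wavelength_m: float) -> float:
    """Frequency of an electromagnetic wave with the given wavelength (f = c / lambda)."""
    return SPEED_OF_LIGHT / wavelength_m


# Band edges quoted in the text, in metres (labels are illustrative).
bands = {
    "LED (blue end)": 400e-9,
    "LED (red end)": 750e-9,
    "LiDAR (short)": 860e-9,
    "LiDAR (long)": 1550e-9,
    "Radar": 4e-3,
}

for name, wl in bands.items():
    # Print each wavelength in nanometres and its frequency in gigahertz.
    print(f"{name:15s} {wl * 1e9:>12,.0f} nm  {frequency_hz(wl) / 1e9:>10,.1f} GHz")

# How many times longer the radar wavelength is than the shortest visible one.
ratio = bands["Radar"] / bands["LED (blue end)"]
print(f"Radar wavelength is {ratio:,.0f}x the shortest visible wavelength")
```

A 4 mm wavelength corresponds to roughly 75 GHz, close to the 76–81 GHz band widely used by automotive radar, and it is about ten thousand times longer than blue light. That spread is why a single optical element cannot simply treat all three beams the same way.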

‘These three wavelengths need to be merged coaxially – that is, along the same axis – and this is where what we call a multispectral combiner comes in,’ said Freialdenhoven. Guiding the beams coaxially in this way is crucial for preventing parallax errors, which are complicated to correct after the fact.
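Why parallax is hard to untangle can be seen with simple geometry. If two sensors sit a baseline b apart and both look at a target at distance d straight ahead of one of them, their lines of sight differ by atan(b / d). The sketch below assumes a hypothetical 0.2 m baseline; the point is that the error depends on the target distance, so it cannot be removed with one fixed calibration offset, whereas a coaxial arrangement (b = 0) eliminates it entirely.

```python
import math


def parallax_deg(baseline_m: float, distance_m: float) -> float:
    """Angle, in degrees, between the lines of sight of two sensors that are
    baseline_m apart, for a target distance_m straight ahead of the first sensor."""
    return math.degrees(math.atan2(baseline_m, distance_m))


# Hypothetical 0.2 m spacing between two side-by-side sensors.
for d in (2.0, 10.0, 50.0):
    print(f"{d:5.1f} m: {parallax_deg(0.2, d):.2f} deg")

# Coaxial sensors share one axis, so the baseline and the parallax are zero.
print(parallax_deg(0.0, 10.0))
```

With these assumed numbers the mismatch is about 5.7° for a target 2 m away but only about 0.23° at 50 m, so any correction would have to know the distance first.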

Arranging the sensors side by side would also take up significantly more space, so the researchers are getting around this by using what are known as bi-combiners. These combine LED light and LiDAR light using a dichroic mirror with a special coating, which guides the two beam bundles along a single axis by means of wavelength-selective reflection. The same principle applies in the second combiner, where the LED light, LiDAR light and radar beam are combined. As radar sensors are already widely used in the automotive sector, the bi-combiner design has to allow manufacturers to continue using existing sensors without modification.
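Wavelength-selective reflection can be modelled, very roughly, as a cutoff: the combiner reflects one range of wavelengths and transmits the rest, so a beam arriving from the side is folded onto the axis of a beam passing straight through. The sketch below is an idealised model, not the Fraunhofer design. The `DichroicCombiner` class, the direction of reflection and both cutoff values are assumptions, chosen only so that each cutoff falls between the bands quoted earlier; the 550 nm and 905 nm sample wavelengths are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class DichroicCombiner:
    """Idealised wavelength-selective element (hypothetical model):
    reflects wavelengths longer than cutoff_m and transmits shorter ones."""
    cutoff_m: float

    def reflects(self, wavelength_m: float) -> bool:
        return wavelength_m > self.cutoff_m


def merges_onto_axis(combiner: DichroicCombiner,
                     through_m: list[float], side_m: float) -> bool:
    """True if every beam on the main axis is transmitted and the side beam
    is reflected, i.e. all beams leave the combiner along one shared axis."""
    transmitted = all(not combiner.reflects(wl) for wl in through_m)
    return transmitted and combiner.reflects(side_m)


# Hypothetical cutoffs placed between the quoted bands.
stage1 = DichroicCombiner(cutoff_m=800e-9)  # separates visible LED light from LiDAR
stage2 = DichroicCombiner(cutoff_m=1e-3)    # separates optical beams from radar

# Illustrative wavelengths in metres.
led, lidar, radar = 550e-9, 905e-9, 4e-3

print(merges_onto_axis(stage1, [led], lidar))         # LED passes, LiDAR is folded in
print(merges_onto_axis(stage2, [led, lidar], radar))  # optical pair passes, radar folded in
```

Chaining two stages mirrors the bi-combiner idea: the first stage yields one coaxial optical beam, and the second adds the radar beam to that same axis.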

So why combine optical systems, LiDAR and radar at all? ‘Each individual system has its strengths, but also its weaknesses,’ Freialdenhoven explained. Optical systems, for example, perform poorly when visibility is limited, such as in fog or dust. Radar systems, on the other hand, cope well with dense fog but are not very good at classifying objects: although they can tell whether something is a person or a tree, their abilities fall well short of those of LiDAR systems.

‘We’re also working on merging data from radar and LiDAR – which will add huge value, especially when it comes to reliability,’ said Freialdenhoven. The team has already submitted a patent application and is now working on creating a prototype.
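The article does not say how the radar and LiDAR data will be merged. As a purely illustrative example of why fusion can improve reliability, the sketch below uses inverse-variance weighting, a standard textbook way to combine two independent measurements of the same quantity; it is not presented as the Fraunhofer method. The distances and variances are hypothetical, with the LiDAR variance inflated in the "fog" case to mimic degraded visibility.

```python
def fuse(est_a: float, var_a: float, est_b: float, var_b: float) -> tuple[float, float]:
    """Inverse-variance weighted mean of two independent estimates.

    Each estimate is weighted by 1 / variance, so the more certain sensor
    dominates. Returns (fused_estimate, fused_variance)."""
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    # The fused variance is never larger than either input variance.
    return fused, 1.0 / (w_a + w_b)


# Hypothetical distance readings in metres, with variances in square metres.
clear = fuse(25.0, 0.01, 25.4, 0.25)  # LiDAR precise, radar coarser
fog = fuse(22.0, 4.0, 25.4, 0.25)     # LiDAR degraded by fog, radar unaffected

print(f"clear: {clear[0]:.2f} m (variance {clear[1]:.4f})")
print(f"fog:   {fog[0]:.2f} m (variance {fog[1]:.4f})")
```

In clear conditions the result leans on the LiDAR reading; in fog the weighting shifts automatically towards the radar, so the fused estimate stays usable when one sensor struggles.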

The technology is expected to open up a whole host of new options for integrating sensors into driver-assistance systems. Smaller light modules, more compact LiDAR sensors and integrated radar sensors will make it possible to create multi-sensor concepts, particularly for self-driving vehicles, where design requirements are becoming more exacting and installation space is limited. As a result, future self-driving systems may be able not only to detect a person, but also to determine how fast they are moving, how far away they are and the angle at which they are positioned relative to the vehicle.
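Distance and angle together fix a person's position relative to the vehicle, and two positions a known time apart give a speed. The sketch below is a minimal illustration under stated assumptions: x points forward and y to the left, the angle (azimuth) is measured from the forward axis, the vehicle's own motion is ignored (so the result is speed relative to the vehicle), and the detections and 0.5 s interval are hypothetical.

```python
import math


def to_xy(range_m: float, azimuth_deg: float) -> tuple[float, float]:
    """Convert a (distance, angle) detection into vehicle coordinates:
    x forward, y to the left, azimuth measured from the forward axis."""
    a = math.radians(azimuth_deg)
    return range_m * math.cos(a), range_m * math.sin(a)


def speed_mps(det_prev: tuple[float, float], det_curr: tuple[float, float],
              dt_s: float) -> float:
    """Speed relative to the vehicle from two detections dt_s seconds apart."""
    x0, y0 = to_xy(*det_prev)
    x1, y1 = to_xy(*det_curr)
    # Straight-line displacement between the two positions, divided by elapsed time.
    return math.hypot(x1 - x0, y1 - y0) / dt_s


# Hypothetical pedestrian: 20 m away, angle changing from 10 to 12 degrees in 0.5 s.
prev = (20.0, 10.0)
curr = (20.0, 12.0)
print(f"{speed_mps(prev, curr, 0.5):.2f} m/s")
```

With these assumed values the pedestrian moves about 0.7 m in half a second, roughly 1.4 m/s or a normal walking pace. A real system would also compensate for the vehicle's own motion and smooth the estimate over many frames.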